As a web developer and website owner, one of my primary responsibilities is ensuring the smooth operation of my clients' online presence. Recently, I faced a frustrating issue with one of my clients' WooCommerce websites: excessive bandwidth consumption that was slowing down their site.

At first, I assumed that bad bots were attacking the website, which made me extremely concerned. To make matters worse, the hosting provider was of little help. I asked them to enhance their security measures, but their support was unhelpful, and I was left to tackle the problem on my own.

The Issue: Excessive Bandwidth Usage

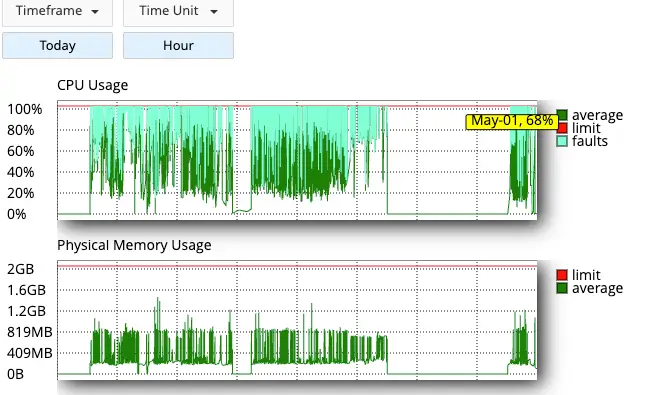

When I first noticed the slow site performance, a quick check revealed unusual spikes in bandwidth usage. This was clearly not normal traffic, and I suspected that bot traffic might be the culprit. With the possibility of a bot attack looming, I quickly contacted the hosting provider and asked them to improve the security settings and check for any malicious activity. They did not take any meaningful action. Instead, they offered a new cPanel, where the same issue would have persisted.

This lack of support from the hosting provider meant I had to dig deeper into the issue myself.

Digging into the Problem: Spending 3 Days Investigating

Over the course of three days, I investigated the root cause of the issue. I combed through the server logs, monitored traffic and analysed the unusual patterns. Eventually, I realised the problem wasn't a bot attack, but rather a misconfiguration in the WooCommerce theme and the way it handled product filters.
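The post doesn't say which tools were used for the log review. As a rough sketch of that kind of analysis, the Python below assumes the server writes the common Apache/Nginx "combined" log format and totals the response bytes spent on filtered shop URLs per user agent. The `FILTER_PARAMS` tuple and the `bytes_by_agent` helper are hypothetical names chosen for illustration.

```python
import re
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Assumed log format: Apache/Nginx "combined". Adjust the regex if your host logs differently.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<target>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical watch list, based on the parameters seen in the crawled URLs.
FILTER_PARAMS = ("filter_cat", "filter_brand", "min_price", "max_price", "et_columns-count")


def bytes_by_agent(lines):
    """Total response bytes spent on filtered shop URLs, grouped by user agent."""
    totals = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m is None:
            continue  # not a combined-format line; skip it
        query = parse_qs(urlsplit(m["target"]).query, keep_blank_values=True)
        if any(param in query for param in FILTER_PARAMS):
            # "-" means no body was sent, so count it as zero bytes
            totals[m["agent"]] += 0 if m["size"] == "-" else int(m["size"])
    return totals
```

Passing it the lines of an access log, e.g. `bytes_by_agent(open("access.log"))`, and printing `.most_common(10)` on the result would show which crawlers spend the most bandwidth on filter pages.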

WooCommerce, being a powerful e-commerce plugin, dynamically generated a large number of product filter URLs with query parameters such as filter_cat=, filter_brand=, min_price= and others. Search engines and other crawlers were discovering and crawling these URLs, and because the filters can be combined freely, every combination was yet another dynamic page for the server to generate and send, which drove up bandwidth consumption.
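To see why filter URLs pile up so quickly, here is a back-of-the-envelope count. The option counts are made up for illustration, and the model is simplified: it ignores pagination, sort order and the fact that the same selection can be written in a different order (`beldon,tp-link` vs `tp-link,beldon`), all of which only add more variants. `count_filter_urls` is a hypothetical helper.

```python
def count_filter_urls(multi_select_sizes, single_select_sizes):
    """Count distinct filter query strings for one shop page (simplified model)."""
    total = 1
    for n in multi_select_sizes:
        # Multi-select filter: any subset of its n options; the empty subset = filter unused.
        total *= 2 ** n
    for n in single_select_sizes:
        # Single-value parameter: one of n values, or the parameter is absent.
        total *= n + 1
    return total
```

With a hypothetical 12 categories, 10 brands and a 4-value column switcher, `count_filter_urls([12, 10], [4])` comes to 20,971,520 distinct query strings for a single shop page, far more than any crawler needs to see.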

Most notably, I discovered that crawlers were constantly requesting URLs such as:

- `/shop/?et_columns-count=1&filter_cat=desktop-switches`

- `/shop/?filter_brand=beldon%2Ctp-link&filter_cat=desktop-switches,unmanaged-poe-switches`

These kinds of URLs were never meant to be accessed or indexed by bots, but they were consistently being crawled, leading to unnecessary bandwidth usage.

The Solution: Blocking Unwanted Bot Traffic

Once I realised what was happening, I took the following steps to resolve the issue:

- Blocking Crawlers Using the Code Snippets Plugin:

I used the Code Snippets plugin to add custom PHP code that blocks known bots and crawlers when they request URLs with filter-related query parameters, since those requests were needlessly consuming bandwidth. The code checks whether the request URL contains query parameters such as filter_cat=, filter_brand= or min_price=, and whether the user agent belongs to a known bot (e.g., Googlebot or Facebook's external crawler). If both are true, it returns a 403 Forbidden status to stop the crawl. The result? The excessive bot traffic was blocked, bandwidth usage dropped dramatically, site speed improved, and the overall user experience returned to normal.

- Alternative: Adjusting robots.txt:

As a backup, I updated the robots.txt file to disallow crawlers from URLs that could hurt site performance. For instance, I added rules that keep crawlers away from URLs with product filters and other dynamic parameters:

```text
User-agent: *
Disallow: /*?*filter_cat=
Disallow: /*?*min_price=
Disallow: /*?*max_price=
Disallow: /*?*orderby=
Disallow: /*?*stock_status=
Disallow: /*?*sale_status=
Disallow: /*?*filter_brand=
Disallow: /*?*et_columns-count=
```
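The post doesn't include the PHP snippet itself. The decision logic it describes (a filter parameter in the URL *and* a known bot user agent, then a 403) can be sketched as below. It is written in Python for illustration rather than the PHP that Code Snippets runs, and the `should_block` name and the `BOT_MARKERS` list are assumptions. In WordPress, the equivalent check would read `$_SERVER['REQUEST_URI']` and `$_SERVER['HTTP_USER_AGENT']` and, when it matches, send the 403 (for example with `status_header(403)`) and stop execution.

```python
from urllib.parse import parse_qs, urlsplit

# Filter parameters named in the post (the robots.txt list above).
FILTER_PARAMS = ("filter_cat", "filter_brand", "min_price", "max_price",
                 "orderby", "stock_status", "sale_status", "et_columns-count")

# Hypothetical user-agent substrings; extend with the bots you actually see in your logs.
BOT_MARKERS = ("googlebot", "bingbot", "facebookexternalhit")


def should_block(request_uri: str, user_agent: str) -> bool:
    """Return True when a known bot requests a filtered shop URL (answer with 403)."""
    # Parse the query string so we match real parameter names, not arbitrary substrings.
    params = parse_qs(urlsplit(request_uri).query, keep_blank_values=True)
    has_filter = any(name in params for name in FILTER_PARAMS)
    # Case-insensitive substring match on the self-reported user agent.
    is_bot = any(marker in user_agent.lower() for marker in BOT_MARKERS)
    return has_filter and is_bot
```

Matching on parsed parameter names rather than raw substrings avoids false positives such as a product slug that happens to contain `filter_cat`. User-agent strings are easy to spoof, though, so this only stops bots that identify themselves honestly.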

How This Helps

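- Tells compliant bots and crawlers not to request those filtered WooCommerce URLs (robots.txt is advisory, so bots that ignore it still have to be handled by the code snippet)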

- Stops wasting bandwidth on thousands of useless, auto-generated filtered pages

- Does not affect real users or normal product pages
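One detail worth checking when writing these rules: a pattern of the form `*/?filter_cat=` only matches when the parameter comes directly after `?`, so `/shop/?et_columns-count=1&filter_cat=desktop-switches` slips past it, whereas `/*?*filter_cat=` matches the parameter anywhere in the query string. The sketch below models the wildcard behaviour Google documents for robots.txt (`*` matches any run of characters, a trailing `$` anchors the end, matching starts at the beginning of the path). It is a simplified, hypothetical helper: it tests one pattern at a time and ignores Allow/Disallow precedence and percent-encoding normalisation, and other crawlers may interpret patterns differently.

```python
import re


def robots_pattern_matches(pattern: str, path_and_query: str) -> bool:
    """Test one robots.txt path pattern against a URL path plus query string."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]  # strip the end anchor before escaping
    # Escape literal characters (such as '?') and turn each '*' into '.*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start, mirroring prefix matching on the path.
    return re.match(regex, path_and_query) is not None
```

Running the example URLs from this post through both forms of the rule is a quick way to confirm which filtered pages each Disallow line actually covers.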

Key Takeaways:

- Understand the Problem First: Don’t jump to conclusions. In this case, I initially thought the site was under attack. However, it turned out to be a misconfiguration of product filters in WooCommerce, which led to unnecessary bandwidth consumption.

- Don’t Rely Solely on Hosting Support: My hosting provider didn’t provide much help in this case. Sometimes, it’s better to troubleshoot issues yourself if your provider doesn’t offer adequate support.

- Use Code Snippets for Quick Fixes: The Code Snippets plugin is an excellent tool to add custom code to your WordPress site without messing with theme files or child themes. It allowed me to implement a solution quickly and efficiently.

- Block Crawlers Early: Preventing bots from crawling unnecessary URLs is crucial for maintaining a fast and efficient site. By blocking crawlers from accessing specific query strings, I was able to free up valuable server resources.

Conclusion

In the end, after a frustrating few days of investigation, I was able to resolve the excessive bandwidth consumption on my client's WooCommerce site, which turned out to be caused by crawlers repeatedly fetching auto-generated product filter URLs rather than by an attack. By blocking that bot traffic with a custom code snippet and updating the robots.txt file, I successfully reduced the load on the server and improved the site's performance.

If you’re facing similar issues with your WooCommerce site, don’t panic. Sometimes the problem is not as severe as it seems. With the right tools and a bit of patience, you can get your website back on track without needing expensive security services or unreliable hosting support.

If you are facing performance issues with your WooCommerce site or need help blocking unwanted bot traffic, feel free to reach out to us at Web Wizard. Our team of web experts can help optimise your website’s performance and security.